Currently working on my e107 Importer plugin , I was confronted today with badly-encoded data coming from my databases.

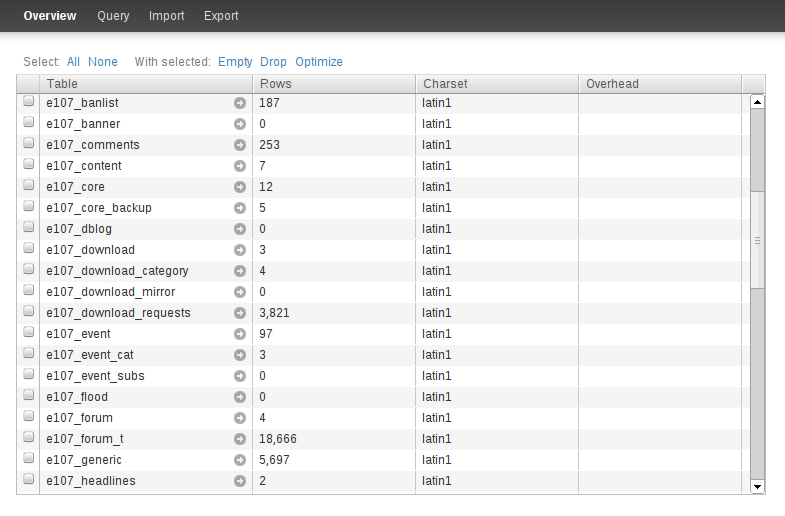

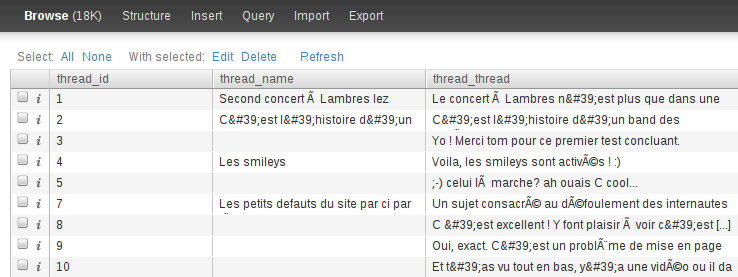

e107 migrated to full UTF-8 years ago , but I must have messed the upgrade process at the time. That was my conclusion when I took a close look to my tables: all of them seems to be set to Latin-1 but contain UTF-8 data. Here are screenshots from SQLBuddy (a great light-weight MySQL manager) showing just that:

To fix this, I first tried to use the following command I found on the web :

$ mysql --database=e107db -B -N -e "SHOW TABLES" | awk '{print "ALTER TABLE", $1, "CONVERT TO CHARACTER SET utf8 COLLATE utf8_general_ci;"}' | mysql --database=e107db

But this doesn’t work, as it not only change the encoding of the table, but also transcode the data inside the table.

Let’s try something else. First, we’ll export the database to a dump file, of which the encoding is forced to Latin-1:

$ mysqldump -a -c -e --no-create-db --add-drop-table --default-character-set=latin1 --databases 'e107db' > ./e107-data.sql

Now the trick is to change the

CHARSET

parameter of all

CREATE

TABLE

directives to UTF-8:

$ sed -i 's/CHARSET=latin1/CHARSET=utf8/g' ./e107-data.sql

We’ll also change the

NAMES

directive to force MySQL to handle imported data as UTF-8:

$ sed -i 's/SET NAMES latin1/SET NAMES utf8/g' ./e107-data.sql

Then we’re free to import the result in a new UTF-8 database:

$ sed -i 's/USE `e107db`;/#USE `e107db`;/g' ./e107-data.sql

$ mysql --execute="CREATE DATABASE e107db_new CHARACTER SET=utf8"

$ mysql --database=e107db_new < ./e107-data.sql

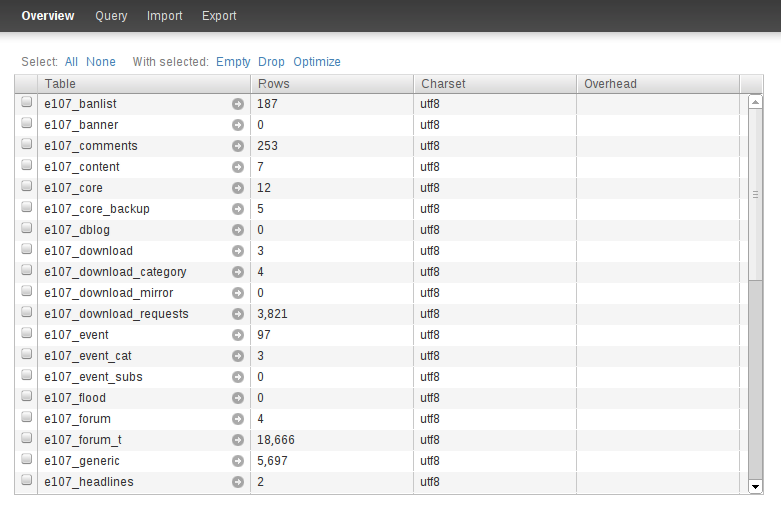

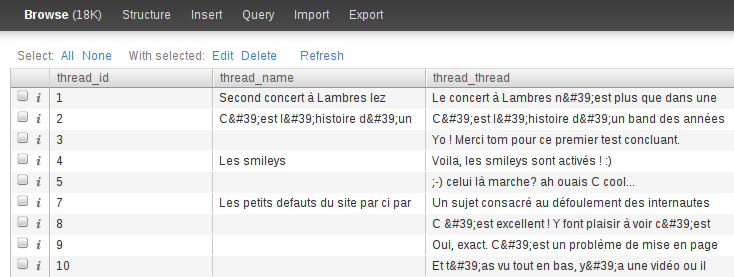

And now, accentuated characters appears as they should in our database, meaning we’ve fixed all the mess! :)

PS: I

found another alternative method

(look at the end of the linked page) which consists of temporarily handling

TEXT

fields as

BLOB

, to have MySQL treat them as binary content (thus skipping character transcoding). Haven’t tested this but sounds tricky.