I’ve just realized I never really finished the series of posts I started in 2010, a series about my longest video project: the biggest concert we performed with Cool Cavemen, in 2009.

Cool Cavemen is currently working on a new album, which means we’re approaching the end of the Multipolar era. This is the right time to dig out what is, without a doubt, the video that best condenses the spirit and intensity of that period. Look at those people dancing! Look how much they like our music! Look how much fun the musicians are having on stage! :)

https://www.youtube.com/watch?v=qE-bis-wYxs&list=SP4BAA557B7144031F

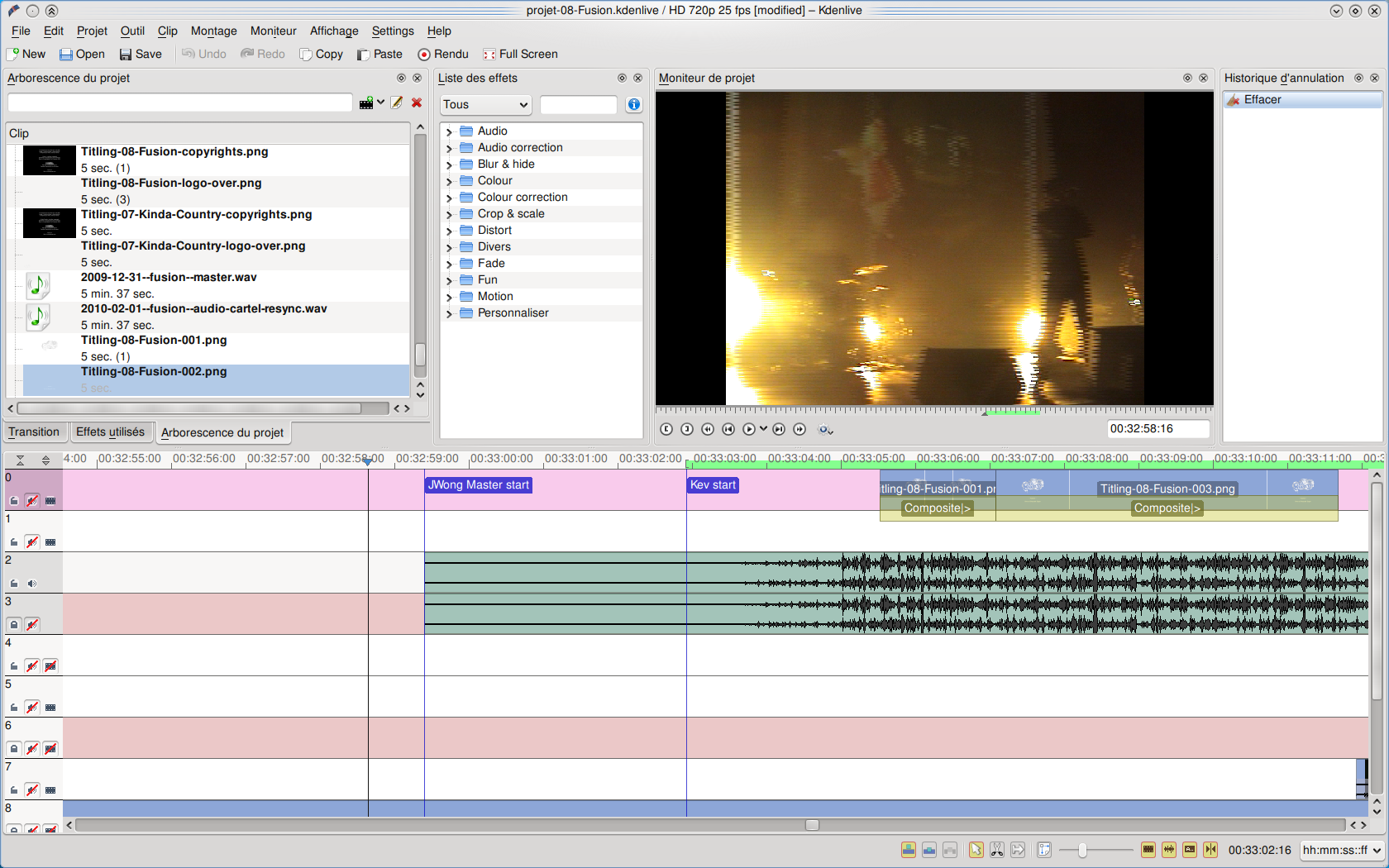

By gathering all kinds of footage from the event, I was able to produce this one-hour coverage of the concert. It took me the whole of 2010 to edit and publish it, one song at a time. The delay was mostly due to lack of time, an unstable MacBook and numerous Kdenlive crashes, all of which forced me to re-create my projects from scratch several times (sigh).

Lighting Design

I didn’t only edit this video: I was also in charge of the concert’s stage lighting design.

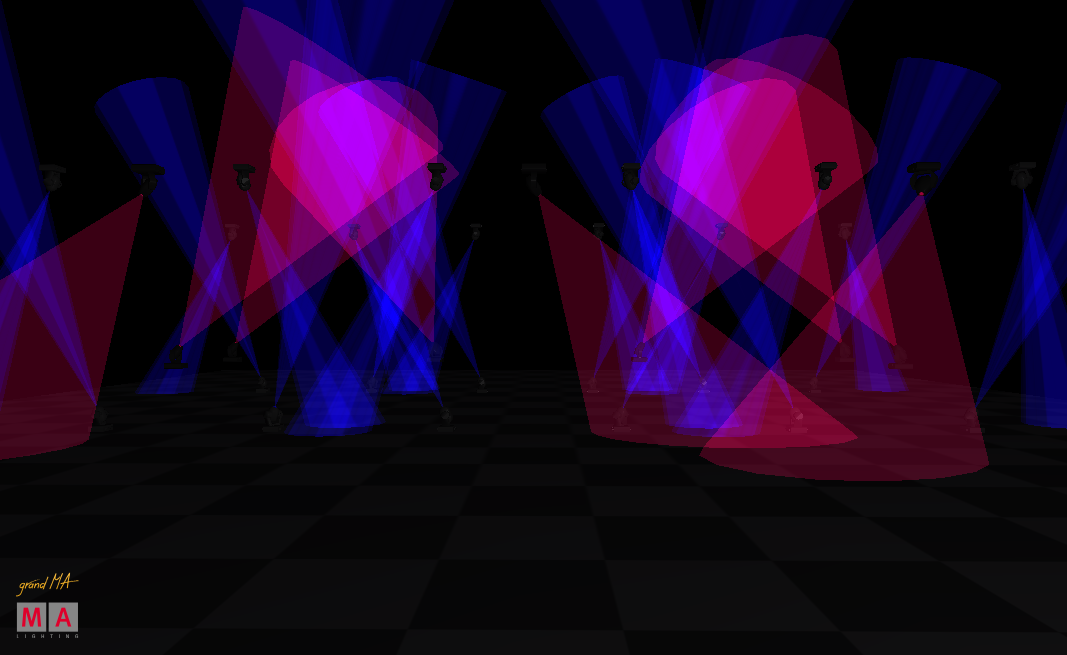

It was the first time I had so much gear to work with (mostly Martin Mac-2000 and Mac-700), including the full-size version of the GrandMA v1 lighting console. A week before the show, I played with the GrandMA’s emulator to get a feel for the desk’s philosophy.

But this short training was not enough to get used to the GrandMA, let alone master it. So when it was time to play live, I chose simple lighting patterns and movements. Of course I made lots of mistakes and the result was far from perfect, but it was good enough to keep the show running. Considering the circumstances, my performance was a success! :)

And I had a secret weapon: I knew I’d be the one editing the video. Being both the lighting designer and the video editor, I was able to hide everything that didn’t feel right and fix all the timing issues after the fact.

Synchronization

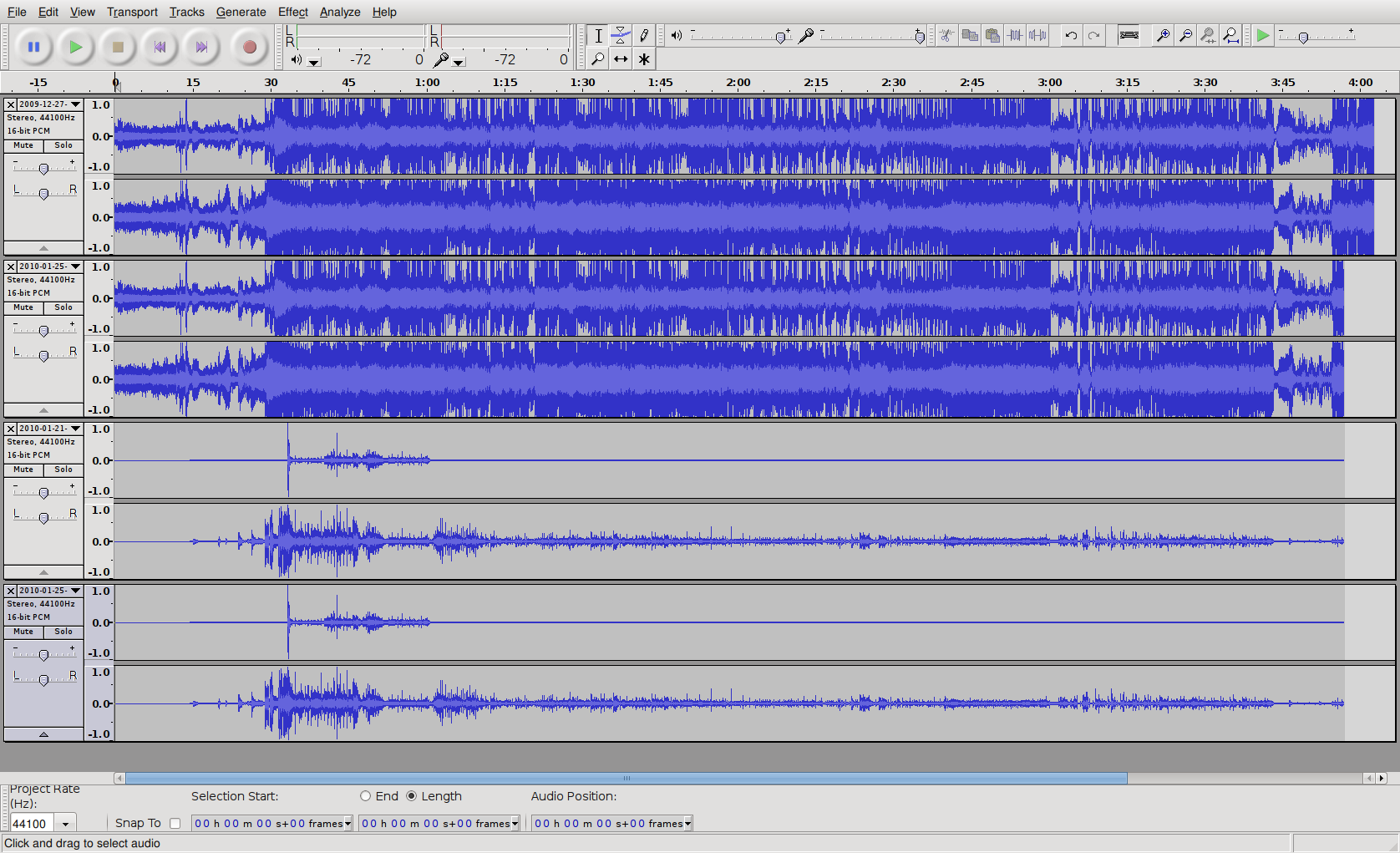

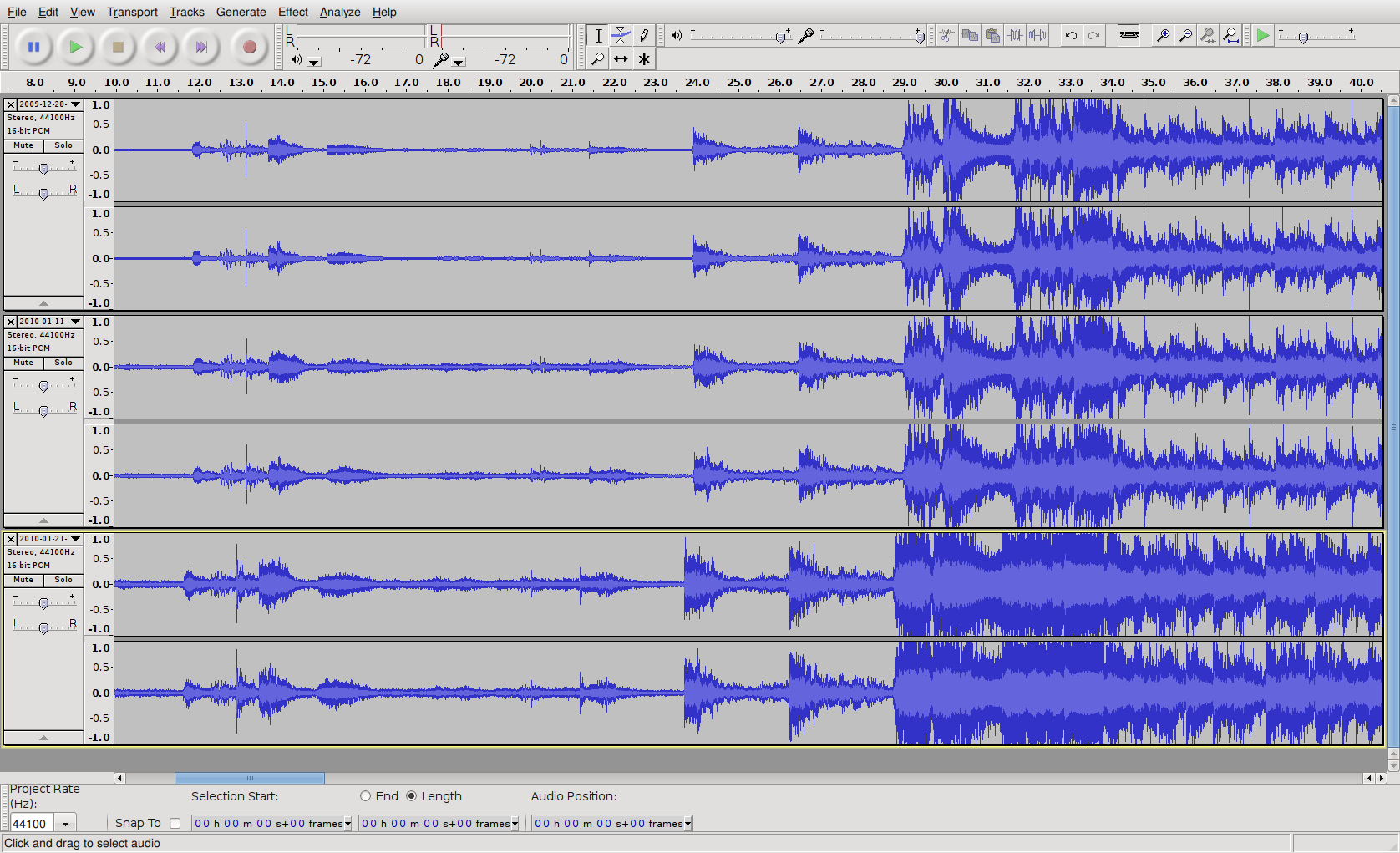

The audio is a multitrack recording taken directly from the front-of-house mixing console and saved on a MacBook.

The raw recording was later remixed by Thomas of the SoundUp Studio.

Using different software for audio and video editing proved challenging, and we were worried about the effects of poor synchronization. After some research, it appears that humans tolerate an audio/video offset below 100 ms:

100 ms being the limit under which the temporal gap between audio and video cannot be noticed.

Philippe Owezarski (LAAS-CNRS), Enforcing Multipoint Multimedia Synchronisation in Videoconferencing Applications
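To put that threshold in concrete terms, here is a small sketch that converts an offset measured in audio samples or video frames into milliseconds and compares it with the 100 ms limit. The helper names are hypothetical, and the 48 kHz sample rate and 25 fps frame rate are assumptions for illustration; this post doesn’t state the rates we actually used.

```python
# Sketch: is an audio/video offset below the ~100 ms perceptual limit?
# The 48 kHz sample rate and 25 fps frame rate are assumptions, not
# values taken from the actual project.

TOLERANCE_MS = 100.0  # limit quoted from Owezarski


def samples_to_ms(offset_samples: int, sample_rate_hz: int = 48_000) -> float:
    """Convert an offset measured in audio samples to milliseconds."""
    return 1000.0 * offset_samples / sample_rate_hz


def frames_to_ms(offset_frames: float, fps: float = 25.0) -> float:
    """Convert an offset measured in video frames to milliseconds."""
    return 1000.0 * offset_frames / fps


def is_noticeable(offset_ms: float, tolerance_ms: float = TOLERANCE_MS) -> bool:
    """True once the offset (early or late) reaches the tolerance."""
    return abs(offset_ms) >= tolerance_ms


if __name__ == "__main__":
    print(samples_to_ms(4_800))             # 100.0
    print(frames_to_ms(2))                  # 80.0
    print(is_noticeable(frames_to_ms(2)))   # False
    print(is_noticeable(frames_to_ms(3)))   # True (120 ms)
```

Under the 25 fps assumption, one frame lasts 40 ms, so the margin amounts to about two and a half frames of slip.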

Now that we had our error margin, we needed a workflow. We designed one around a reference track extracted from the camera recordings:

- First, Thomas starts working on a song. When he has something to show us, he downmixes his Cubase project and exports intermediate results under the name `2010-01-29--igor--audio-desync.wav`. This allows all band members to give feedback.

- Once the final mix of the song is validated, I export the audio reference from my video edit (i.e. the plain recording from the cameras) under the name `2010-02-15--igor--audio-ref.wav`. We use this file as the reference audio track.

- Then, Thomas shifts the `2010-01-29--igor--audio-desync.wav` file in time to precisely match the `2010-02-15--igor--audio-ref.wav` reference file, and saves the result under the name `2010-02-16--igor--audio-sync.wav`. This is the file I import into Kdenlive and align with my video using the reference track.
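This post doesn’t say how Thomas found the right shift in Cubase. One common way to automate that step, assumed here rather than taken from our actual workflow, is cross-correlation: slide the remixed track against the reference and keep the lag where the two match best. The sketch below is hypothetical (`estimate_lag` and `shift` are illustrative names), works on mono signals given as Python lists at the same sample rate, and uses a brute-force search over a bounded range of lags.

```python
# Hypothetical sketch: estimate the shift between a remixed track and the
# camera reference track by brute-force cross-correlation, then apply it.
# Assumes mono signals as lists of floats at the same sample rate.
import random


def estimate_lag(ref: list[float], mix: list[float], max_lag: int) -> int:
    """Return the lag (in samples) at which `mix` best matches `ref`.

    Positive: `mix` starts late and must be moved earlier.
    Negative: `mix` starts early and must be moved later.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Dot product of ref[i] and mix[i + lag] over the overlapping samples.
        score = 0.0
        for i, r in enumerate(ref):
            j = i + lag
            if 0 <= j < len(mix):
                score += r * mix[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def shift(signal: list[float], lag: int) -> list[float]:
    """Move `signal` earlier by `lag` samples (later if negative).

    Gaps are padded with silence so the length stays the same.
    """
    n = len(signal)
    if lag >= 0:
        return signal[lag:] + [0.0] * min(lag, n)
    return [0.0] * min(-lag, n) + signal[: max(n + lag, 0)]


if __name__ == "__main__":
    random.seed(0)
    # Synthetic "reference": 2,000 samples of white noise.
    ref = [random.uniform(-1.0, 1.0) for _ in range(2_000)]
    # Same audio, but starting 37 samples late (a desynchronized export).
    desync = [0.0] * 37 + ref[:-37]
    lag = estimate_lag(ref, desync, max_lag=100)
    synced = shift(desync, lag)
    print(lag, synced[:1963] == ref[:1963])
```

The brute-force loop costs roughly `len(ref) × (2 × max_lag + 1)` multiplications, which is fine for this toy signal but slow for a full song; an FFT-based correlation such as SciPy’s `scipy.signal.correlate` would be the usual choice there. It also assumes the remix still resembles the raw camera audio closely enough for the peak to stand out.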

Before using that workflow on all our tracks, we checked that it did not introduce any delay. Unfortunately, I detected some.

I had introduced them myself while trying to get rid of video timecode artifacts: I messed with encoding parameters in Avidemux, which introduced the delays. When I realized I could simply use the crop filter in Kdenlive instead of removing the timecode with external software, the timing came out perfect. That’s another big lesson from this project: stay in Kdenlive.